Databricks Performance Optimization

Cut Costs and Boost Performance with Push-Down Processing

July 29, 2025

If you're using Databricks for your data pipelines, you know that compute costs can quickly balloon when transformations run outside the platform or depend on inefficient orchestration. You pay for Databricks performance, yet many pipelines still grind through slow transformations in external services or spin up costly clusters for short jobs. Avoiding this requires cost-aware orchestration that uses compute efficiently across the entire pipeline architecture.

This results in wasted resources and longer runtimes that inflate your cloud bills.

This article explains how Databricks push-down processing moves transformation logic into Databricks itself, so it runs next to the data.

By doing so, you can unlock better performance, reduce runtime costs dramatically, and simplify your pipeline architecture. If your current pipelines pull data across multiple layers instead of processing it where it lives, this article is for you.

The Databricks Cost Optimization Challenge: Why Traditional Pipelines Fail

Many data teams integrate Databricks with Azure Data Factory to orchestrate ETL pipelines. In this setup, data is extracted using Data Factory pipelines and then processed inside Databricks notebooks. While this approach works, it often introduces several inefficiencies:

- Latency from orchestration overhead: Pipelines call notebooks, and each notebook run spins up a job cluster. Cluster startup time adds latency to every step.

- Excessive compute usage: Clusters sized for peak workloads run for extended periods to accommodate notebook execution, driving up DBU (Databricks Unit) consumption.

- Complex pipeline dependencies: Multiple stages across services increase operational complexity and make monitoring and troubleshooting harder.

Azure Data Factory Databricks Integration Bottlenecks

Azure Data Factory (ADF) pipelines often invoke Databricks notebooks as activities. This means each transformation step triggers a notebook run, which requires spinning up a job cluster unless a long-running cluster is maintained. The cluster startup and teardown overhead is non-trivial and can cause pipelines to run slower than necessary.

In addition, managing incremental loads and data consistency across these orchestrated steps can be complex. For example, high watermark tracking and change data capture must be carefully implemented in both ADF and Databricks notebooks, increasing development and maintenance effort.

Because of these bottlenecks, pipelines often run longer and consume more resources than needed, resulting in wasted cloud spend and missed opportunities for performance gains.

What Is Databricks Push-Down Processing for Pipeline Performance?

Databricks push-down processing is a method where the transformation logic is pushed directly into the Databricks compute layer instead of being orchestrated externally. This means that instead of Azure Data Factory pipelines calling Databricks notebooks sequentially, the entire transformation workflow runs natively within Databricks as a job or workflow.

This approach eliminates the overhead of spinning up multiple job clusters and reduces the latency between pipeline steps. The processing happens closer to the data, enabling faster execution and better resource utilization.

Databricks Serverless Compute Advantages in Push-Down Architecture

One of the major benefits of push-down processing is that it can use Databricks serverless compute efficiently. Serverless compute takes cluster management off your hands and bills only for what the job run consumes. This model reduces costs significantly compared to dedicated clusters that run continuously or for long periods.

For example, a data pipeline that previously took two hours on a large cluster consuming 50 DBUs per hour was reprocessed in under 30 minutes using push-down processing on serverless compute consuming roughly 15 DBUs per hour.

That is a 75% reduction in runtime, and total consumption per run falls from about 100 DBUs to roughly 7.5 DBUs: a massive saving for any organization.
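The savings in this example follow from simple arithmetic: DBUs consumed equal runtime in hours multiplied by the DBU rate. The short Python sketch below uses only the figures quoted above. It counts DBUs, not dollars; the price per DBU differs between serverless and classic compute (and classic compute also incurs separate cloud VM charges), so plug in your own rates before estimating a bill.

```python
def dbus_consumed(runtime_hours: float, dbu_per_hour: float) -> float:
    """DBUs billed for one run: runtime in hours multiplied by the DBU rate."""
    return runtime_hours * dbu_per_hour

# Figures from the example: 2 h at 50 DBU/h before, under 0.5 h at roughly 15 DBU/h after.
before_hours, before_rate = 2.0, 50.0
after_hours, after_rate = 0.5, 15.0

before_dbus = dbus_consumed(before_hours, before_rate)  # 100.0
after_dbus = dbus_consumed(after_hours, after_rate)     # 7.5

runtime_reduction = 1 - after_hours / before_hours      # 0.75
dbu_reduction = 1 - after_dbus / before_dbus            # 0.925

print(f"DBUs per run: {before_dbus:.1f} -> {after_dbus:.1f}")
print(f"Runtime reduction: {runtime_reduction:.0%}")
print(f"DBU reduction: {dbu_reduction:.1%}")
```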

Databricks Job Optimization Through Direct Workflow Execution

Push-down processing also allows pipeline workflows to be defined as Databricks jobs directly. Instead of managing multiple notebook activities from Azure Data Factory, you can create Databricks workflows that:

- Run transformation logic in dependency order within Databricks

- Handle incremental and restartable loads efficiently with metadata control

- Include built-in logic for cleanup, file movement, and logging

This leads to better job optimization by reducing orchestration overhead and improving fault tolerance. The workflow can resume from the failed step instead of reprocessing everything, saving time and compute.
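To make "run transformation logic in dependency order" concrete, here is a minimal Python sketch that turns a task list into a job definition shaped like the Databricks Jobs API 2.1 `jobs/create` payload (`tasks`, `task_key`, `notebook_task`, `depends_on`). The pipeline, task names, and notebook paths are hypothetical, and compute settings are deliberately left out. By default, Databricks starts a task only after every task in its `depends_on` list has succeeded.

```python
import json

def build_job_settings(job_name: str, tasks: dict[str, list[str]], notebook_root: str) -> dict:
    """Build a Jobs API 2.1-style job definition from {task_key: [upstream task keys]}."""
    job_tasks = []
    for key, upstream in tasks.items():
        task = {
            "task_key": key,
            "notebook_task": {"notebook_path": f"{notebook_root}/{key}"},
        }
        if upstream:
            # Databricks waits for these tasks before starting this one.
            task["depends_on"] = [{"task_key": dep} for dep in upstream]
        job_tasks.append(task)
    return {"name": job_name, "tasks": job_tasks}

# Hypothetical pipeline: land two sources, stage each, then load one Silver table.
pipeline = {
    "land_customer": [],
    "land_orders": [],
    "stage_customer": ["land_customer"],
    "stage_orders": ["land_orders"],
    "load_silver_sales": ["stage_customer", "stage_orders"],
}
settings = build_job_settings("sales_pushdown", pipeline, "/Workspace/pipelines/sales")
print(json.dumps(settings, indent=2))
```

Because the two landing tasks have no upstream dependencies, Databricks can run them in parallel, while `load_silver_sales` waits for both staging tasks.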

Implementing Push-Down Processing: Databricks Performance Optimization Strategy

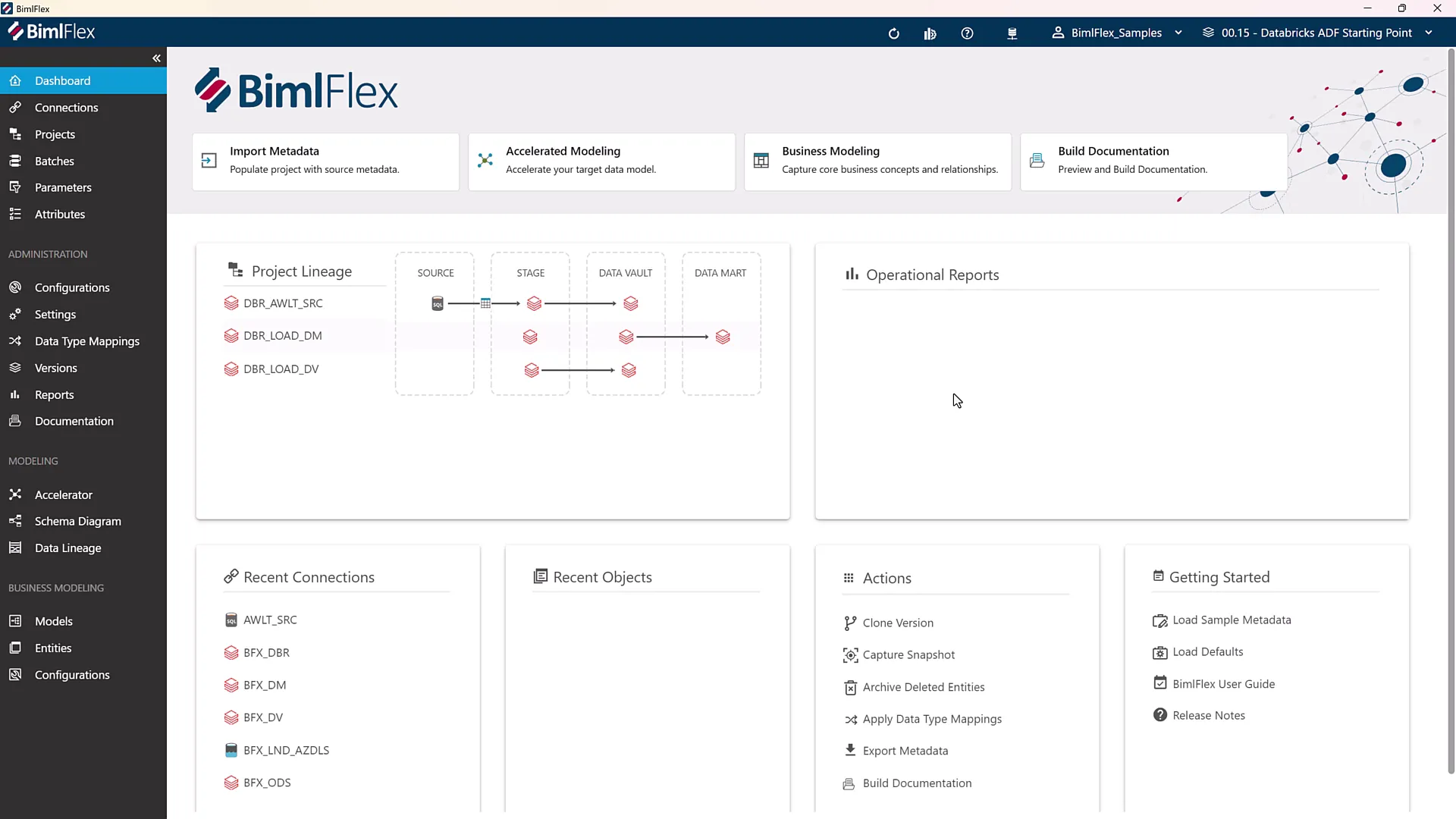

Implementing push-down processing requires a metadata-driven approach that generates pipeline artifacts automatically. This reduces manual coding effort and ensures consistency across environments.

Metadata-Driven Pipeline Generation for Databricks Cost Optimization

Using a metadata-driven framework, you start by loading metadata that describes your data sources, layers, and transformation rules. The architecture typically follows lakehouse design principles with defined layers:

- Landing layer (Bronze): Raw ingestion of source data

- Staging layer (Bronze): Data cleansing and quality transformations

- Data Vault or data warehouse layer (Silver): Integration and historization of data

- Data mart layer (Gold): Business-ready, curated datasets

Separating the layers keeps the architecture modular and the data boundaries clear, which simplifies governance and auditability. Organizations implementing Data Vault patterns can benefit from Data Vault acceleration techniques that automate the complex modeling required at the Silver layer.
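A minimal sketch of the metadata-driven idea, assuming a hypothetical metadata model: each source object is described once, and its per-layer tasks and their dependencies are derived from that description rather than hand-written. The class, field names, and task-naming convention are illustrative, not the schema of any particular tool; real frameworks capture far richer metadata (keys, data types, transformation rules), and data marts usually combine several sources rather than mapping one-to-one.

```python
from dataclasses import dataclass

# Layer order used by this sketch; it mirrors the lakehouse layers listed above.
LAYERS = ["landing", "staging", "datavault", "datamart"]

@dataclass(frozen=True)
class SourceObject:
    """Hypothetical metadata row describing one source table."""
    system: str
    table: str
    layers: tuple[str, ...] = tuple(LAYERS)  # which layers this object flows through

def generate_tasks(objects: list[SourceObject]) -> dict[str, list[str]]:
    """Derive one task per object per layer; each task depends on the previous layer's task."""
    tasks: dict[str, list[str]] = {}
    for obj in objects:
        previous = None
        for layer in LAYERS:  # iterate in canonical order, not metadata order
            if layer not in obj.layers:
                continue
            key = f"{layer}_{obj.system}_{obj.table}"
            tasks[key] = [previous] if previous else []
            previous = key
    return tasks

objects = [
    SourceObject("crm", "customer"),
    SourceObject("erp", "orders", layers=("landing", "staging")),
]
for key, upstream in generate_tasks(objects).items():
    print(key, "<-", upstream)
```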

Automated Schema Management and Import Workflows

Schema import automation is a critical enabler of metadata-driven pipelines. You can connect to relational databases, flat files, or Parquet files and import their metadata automatically. This process generates schema diagrams and lets you review and modify relationships or overrides as needed.

Automated metadata extraction reduces development time, improves consistency, and makes onboarding new systems faster and less error-prone.
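As an illustration of automated schema import, the sketch below reads table, column, and foreign-key metadata from a relational source. It uses Python's built-in `sqlite3` module purely as a stand-in so the example runs anywhere; against SQL Server or another RDBMS you would query that system's catalog (for example the `INFORMATION_SCHEMA` views) instead. The shape of the returned dictionary is an assumption, not a format any particular tool uses.

```python
import sqlite3

def import_schema(conn: sqlite3.Connection) -> dict:
    """Collect columns and foreign-key relationships for every user table."""
    schema = {}
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    )]
    for table in tables:
        # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
        columns = [
            {"name": name, "type": col_type, "nullable": not notnull, "pk": bool(pk)}
            for _cid, name, col_type, notnull, _default, pk
            in conn.execute(f'PRAGMA table_info("{table}")')
        ]
        # PRAGMA foreign_key_list rows: (id, seq, table, from, to, on_update, on_delete, match)
        relationships = [
            {"column": fk[3], "references": f"{fk[2]}.{fk[4]}"}
            for fk in conn.execute(f'PRAGMA foreign_key_list("{table}")')
        ]
        schema[table] = {"columns": columns, "relationships": relationships}
    return schema

# Usage: a throwaway in-memory database standing in for a source system.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        order_id INTEGER PRIMARY KEY,
        customer_id INTEGER REFERENCES customer(customer_id),
        amount REAL
    );
""")
for table, meta in import_schema(conn).items():
    print(table, [c["name"] for c in meta["columns"]], meta["relationships"])
```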

Advanced Databricks Pipeline Performance: Workflow Architecture and Execution

Databricks Cluster Optimization with Serverless Computing

Optimizing clusters is key to cost control. Push-down processing leverages serverless clusters where possible, minimizing idle time and scaling compute resources precisely to workload demands. This contrasts with traditional pipelines that rely on large, always-on clusters.

In the push-down model, workflows run on serverless compute or on job clusters that exist only for the duration of the job. Paying only while the job is actually running reduces DBU consumption and lowers costs without sacrificing performance.
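The two compute options described above map onto how a job definition is written. This Python sketch uses field names from the Databricks Jobs API 2.1 (`job_clusters`, `job_cluster_key`, `new_cluster`, `autoscale`). It either leaves tasks without a cluster spec so they run on serverless compute (assuming serverless jobs compute is enabled in your workspace) or attaches one autoscaling job cluster shared by every task. The runtime version, VM type, and worker counts are placeholders.

```python
def attach_compute(job: dict, serverless: bool) -> dict:
    """Return a copy of a Jobs API 2.1-style job definition with compute attached."""
    # Shallow-copy the job and each task so the caller's definition is not mutated.
    job = {**job, "tasks": [dict(task) for task in job["tasks"]]}
    if serverless:
        # No cluster spec: tasks run on serverless compute, if the workspace allows it.
        return job
    job["job_clusters"] = [{
        "job_cluster_key": "shared_job_cluster",
        "new_cluster": {
            "spark_version": "15.4.x-scala2.12",  # placeholder runtime version
            "node_type_id": "Standard_DS3_v2",    # placeholder Azure VM type
            "autoscale": {"min_workers": 1, "max_workers": 4},
        },
    }]
    for task in job["tasks"]:
        # Every task reuses the same cluster, which exists only while the run is active.
        task["job_cluster_key"] = "shared_job_cluster"
    return job

base = {"name": "sales_pushdown", "tasks": [{"task_key": "land"}, {"task_key": "stage"}]}
print(attach_compute(base, serverless=False))
```

Sharing one job cluster across all tasks means the cluster starts once per run rather than once per notebook, which is the startup overhead the notebook-per-activity pattern suffers from.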

Handling Incremental and Full Load Processing

Data ingestion can vary between full historical loads and incremental (delta) loads. Push-down processing supports separate notebooks optimized for each:

- Full loads: Efficiently ingest millions of historical rows without delta detection overhead

- Delta loads: Handle change detection and incremental updates for ongoing data ingestion

This flexibility allows pipelines to be tuned for performance based on the ingestion pattern, further improving overall pipeline efficiency.
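The difference between the two load patterns comes down to whether change detection runs. The plain-Python sketch below models a source as a list of rows and uses a high watermark on a hypothetical `modified_at` column. In Databricks the same logic would typically be a filtered Spark read followed by a Delta Lake `MERGE`, which is not shown here.

```python
from datetime import datetime
from typing import Optional

def full_load(source: list[dict]) -> list[dict]:
    """Full load: copy every row; no change detection is performed."""
    return list(source)

def delta_load(source: list[dict], watermark: Optional[datetime], column: str = "modified_at"):
    """Delta load: keep only rows changed after the stored high watermark.

    Returns (changed_rows, new_watermark); the caller persists new_watermark
    in its metadata so the next run picks up where this one stopped.
    """
    if watermark is None:  # first run: nothing recorded yet, take everything
        changed = list(source)
    else:
        changed = [row for row in source if row[column] > watermark]
    new_watermark = max((row[column] for row in changed), default=watermark)
    return changed, new_watermark

source = [
    {"id": 1, "modified_at": datetime(2025, 7, 1)},
    {"id": 2, "modified_at": datetime(2025, 7, 15)},
    {"id": 3, "modified_at": datetime(2025, 7, 28)},
]
rows, watermark = delta_load(source, datetime(2025, 7, 10))
print([r["id"] for r in rows], watermark)
```

The strict `>` comparison avoids reloading the last row, but a row committed later with exactly the watermark timestamp would be missed; a common alternative is `>=` combined with deduplication, or a monotonically increasing change sequence instead of a timestamp.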

Azure Data Factory Databricks Integration: Best Practices for Push-Down Processing

CI/CD Pipeline Integration for Databricks Job Optimization

Automation plays a vital role in maintaining optimized Databricks pipelines. Using tools like PowerShell scripts and Azure DevOps pipelines, you can automate the build and deployment of pipeline artifacts, including notebooks and workflows.

This automation ensures rapid deployment, traceability, and minimal manual intervention. For a broader look at automation in Databricks environments, the guide to streamlining Databricks solutions with BimlFlex data automation covers end-to-end pipeline optimization and cost control.
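Whatever the scripting language (the article mentions PowerShell in Azure DevOps), the core deployment step is usually a call to the Databricks Jobs API. This Python sketch builds, but does not send, that request: `jobs/create` for a new job, or `jobs/reset` to overwrite all settings of an existing `job_id`. The host, token, and job definition are placeholders; in a CI/CD pipeline the token would come from a secret store.

```python
import json
import os
import urllib.request
from typing import Optional

def build_deploy_request(host: str, token: str, settings: dict,
                         job_id: Optional[int] = None) -> urllib.request.Request:
    """Build (but do not send) a Jobs API 2.1 request that deploys a job definition."""
    if job_id is None:
        endpoint, body = "create", settings                             # new job
    else:
        endpoint, body = "reset", {"job_id": job_id, "new_settings": settings}  # overwrite existing job
    return urllib.request.Request(
        url=f"{host.rstrip('/')}/api/2.1/jobs/{endpoint}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )

if __name__ == "__main__":
    req = build_deploy_request(
        os.environ.get("DATABRICKS_HOST", "https://adb-example.azuredatabricks.net"),
        os.environ.get("DATABRICKS_TOKEN", "placeholder-token"),
        {"name": "sales_pushdown", "tasks": []},
    )
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req) would perform the call; omitted so the sketch has no side effects.
```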

Monitoring and Maintenance in Optimized Databricks Workflows

Push-down processing workflows include built-in logic for logging, error handling, and cleanup. Jobs track metadata to determine what data needs processing and support restarting from failed steps rather than reprocessing entire datasets.

This intelligent orchestration enhances pipeline resilience and reduces downtime, particularly for large enterprise data loads where failures can be costly.
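Databricks jobs can already rerun failed tasks through repair runs; the sketch below illustrates the metadata-driven variant described here in plain Python. A persisted `state` dictionary stands in for a hypothetical logging table, tasks run in dependency order via the standard library's `graphlib.TopologicalSorter` (Python 3.9+), and a rerun skips every task already marked as succeeded.

```python
from graphlib import TopologicalSorter
from typing import Callable

def run_pipeline(deps: dict[str, list[str]],
                 actions: dict[str, Callable[[], None]],
                 state: dict[str, str]) -> dict[str, str]:
    """Run tasks in dependency order, skipping tasks already marked 'succeeded'.

    `state` survives between runs, so a rerun resumes at the failed task
    instead of reprocessing everything.
    """
    for task in TopologicalSorter(deps).static_order():
        if state.get(task) == "succeeded":
            continue  # finished in an earlier run; do not reprocess
        if any(state.get(dep) != "succeeded" for dep in deps.get(task, [])):
            state[task] = "skipped"  # an upstream task failed; don't run on incomplete inputs
            continue
        try:
            actions[task]()
            state[task] = "succeeded"
        except Exception as exc:  # record the failure and carry on with independent tasks
            state[task] = f"failed: {exc}"
    return state
```

Calling `run_pipeline` a second time with the same `state` after a failure reruns only the failed task and the tasks skipped because of it.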

Measuring Success: Databricks Cost Optimization ROI and Performance Gains

Implementation Roadmap for Databricks Performance Optimization

Organizations adopting push-down processing can expect to:

- Reduce runtime costs by up to 75% by leveraging serverless compute and minimizing orchestration overhead

- Shorten pipeline runtimes significantly, improving data freshness and agility

- Simplify pipeline architecture with fewer external dependencies and streamlined job workflows

- Gain better control over incremental data processing and fault tolerance

The transition involves configuring metadata-driven pipelines, enabling push-down processing options, automating builds with CI/CD, and updating orchestration to use Databricks jobs instead of notebook activities.

Getting Started with Push-Down Processing in Your Environment

To begin, load your source metadata and configure your lakehouse layers according to your architecture. Enable push-down processing in your metadata-driven pipeline tool to generate Databricks workflows directly.

Integrate with your existing Azure Data Factory pipelines by replacing notebook activities with Databricks job activities that invoke the workflows. Automate builds and deployments with scripts and CI/CD pipelines to maintain consistency and speed.

Monitor job runs for performance and cost metrics, and fine-tune cluster configurations as needed. Over time, you’ll see substantial improvements in Databricks pipeline performance and cost optimization.
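For the cost side of monitoring, Databricks exposes billing data in system tables. The sketch below builds a Spark SQL query that totals DBUs per job per day from `system.billing.usage`, assuming system tables are enabled for your account; check the column names against your workspace, since system table schemas evolve. Converting DBUs to currency requires joining list prices from `system.billing.list_prices`, which is not shown.

```python
# Assumes a Databricks notebook (where `spark` and `display` are predefined)
# and that the system.billing schema is enabled for the account.
DBU_BY_JOB_SQL = """
SELECT
  usage_metadata.job_id AS job_id,
  usage_date,
  sku_name,
  SUM(usage_quantity) AS dbus
FROM system.billing.usage
WHERE usage_metadata.job_id IS NOT NULL
  AND usage_date >= date_sub(current_date(), {days})
GROUP BY usage_metadata.job_id, usage_date, sku_name
ORDER BY usage_date, job_id
"""

def dbu_by_job_query(days: int = 30) -> str:
    """Return the DBUs-per-job query covering the last `days` days."""
    if not isinstance(days, int) or days <= 0:
        raise ValueError("days must be a positive integer")
    return DBU_BY_JOB_SQL.format(days=days)

# In a notebook:
# display(spark.sql(dbu_by_job_query(30)))
```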

Conclusion

If your data pipelines still rely heavily on external orchestration and notebook activities to process data in Databricks, you’re likely overpaying for compute and waiting longer than necessary for results.

Databricks push-down processing moves the transformation logic directly into the Databricks compute layer, eliminating unnecessary overhead and unlocking significant cost savings and performance improvements.

By adopting a metadata-driven approach and leveraging serverless compute, you can reduce runtimes by up to 75% and simplify your pipeline architecture. Automation through CI/CD pipelines ensures rapid deployment and operational efficiency, while built-in job orchestration features improve fault tolerance and incremental processing.

Optimizing your Databricks workflows with push-down processing is a strategic move to get the most out of your Databricks investment.

It’s time to process data where it lives (inside Databricks!) and stop wasting resources on slow, costly transformations outside the platform.

Schedule a demo today if you are ready to reduce your Databricks runtime costs.