Varigence Blog

Upcoming Business Modeling webinars

We believe Business Modeling is a feature that is core to delivering lasting and manageable data solutions. To promote, explain and discuss this feature, along with the planned improvements, we have organized a number of webinars.

If this is of interest, please register now for the first two webinars. These presentations will focus on the benefits of creating a business model, the structured approach to do so, how to map data to the business model, and much more.

Each webinar has US (EST) and EU/AUS (CET) time slots. The sessions will be presented live and recordings will also be made available afterwards.

More details here.

Dev Diary - Customizing file paths for Delta Lake

The Delta Lake storage layer offers interesting opportunities to use your Azure Data Lake Gen2 storage account in ways that are similar to using a database. One of the drivers for BimlFlex to support Azure Data Factory (ADF) Mapping Data Flows ('data flows') is to be able to offer a 'lakehouse' style architecture for your data solution, using Delta Lake.

BimlFlex supports delivering a Delta Lake-based solution by using the 'Mapping Data Flows' integration template (currently in preview). This integration template directs the BimlFlex engine to deliver the output of the design metadata as native data flows. These can then be directly deployed to Azure Data Factory.

In BimlFlex, configuring the solution design for Delta Lake works the same way as using any other supported connection. The difference is that the connection maps to an Azure Data Lake Gen2 Linked Service. The container specified in the connection refers to the root blob container in the data lake resource.

Recently, we have added flexibility to configure exactly where the files are placed inside this container. This is important because, compared to a database, directories (folders) act as the 'tables' in Delta Lake.

So, even though you may still configure different connections at a logical level in BimlFlex (e.g. to indicate separate layers in the design), they can now point to the same container, using separate directories to keep the data apart. You can have your Staging Area and Persistent Staging Area in the same container, in different directories. Or you can place these in the same container and directory using prefixes to identify each layer. And you can now add your Data Vault and Dimensional Model to the same container as well.

This way, you can now define where your 'tables' are created in your Delta Lake lakehouse design using BimlFlex.
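As a rough illustration of the idea that a folder acts as a 'table', the sketch below composes a folder path from a container, an optional directory and an optional prefix. The `LayerConnection` class, its fields and the path layout are hypothetical and only serve the example; they are not BimlFlex settings or output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerConnection:
    """Hypothetical stand-in for a logical connection; not a BimlFlex type."""
    name: str
    container: str
    directory: str = ""  # optional folder inside the container
    prefix: str = ""     # optional prefix added to each 'table' folder


def delta_table_path(conn: LayerConnection, object_name: str) -> str:
    """Return the folder that would act as the Delta Lake 'table'."""
    parts = [conn.container]
    if conn.directory:
        parts.append(conn.directory.strip("/"))
    parts.append(f"{conn.prefix}{object_name}")
    return "/".join(parts)


# Two logical layers sharing one container and one directory,
# kept apart by a prefix (assumed names).
staging = LayerConnection("STG", "datalake", directory="staging")
persistent = LayerConnection("PSA", "datalake", directory="staging", prefix="psa_")

print(delta_table_path(staging, "Account"))     # datalake/staging/Account
print(delta_table_path(persistent, "Account"))  # datalake/staging/psa_Account
```

Giving each layer its own directory instead of a prefix works the same way: only the `directory` value changes.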

Dev Diary - Configuring load windows and filters for Mapping Data Flows

When developing a data solution, it is often a requirement to be able to select data differentials ('deltas') when receiving data from the operational systems that act as the data 'source'.

This way, not all data is loaded every time a given source object (table, or file etc.) is processed. Instead, data can be selected from a certain pointer, or value, onwards. The highest value that is identified in the resulting data set selection can be maintained in the data logistics control framework. It is saved in the repository, so that the next time the data logistics process starts, the data can be selected from this value onwards again.

This is sometimes referred to as a high water mark for data selection. Every time a new data differential is loaded based on the previously known high water mark, a new (higher) water mark is recorded. This assumes there is any data available for the selection, otherwise the high water mark remains as it was. The process is up-to-date in this case. There is nothing to do until new data changes arrive.

The range between the most recent high water mark and the highest value of the incoming data set can be referred to as the load window. The load window, as a combination of a 'from' and a 'to' value, is used to filter the source data set.

To implement this mechanism, the data logistics process first retrieves the most recent high water mark from the repository (the 'from' value). Then, the data source is queried to retrieve the current highest possible value (the 'to' value). The 'from' and 'to' values define the load window, and are added to the selection logic that connects to the data source.

If the 'from' and 'to' values are the same, there is no new data to process and the selection can be skipped. In this scenario, there is also no need to update the high water mark for the next run. If the data retrieval were executed, it would not return any data anyway.

When the 'to' value is different from the 'from' value, the 'to' value is saved in the repository as the new high water mark.
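The mechanism described above can be sketched in a few lines. This is a minimal illustration, assuming an integer 'version' column as the pointer, a plain dictionary as the repository, and a window that excludes the 'from' value and includes the 'to' value. All names are hypothetical and do not reflect the BimlCatalog API.

```python
from typing import Optional, Tuple


def resolve_load_window(
    stored_high_water_mark: int,
    current_source_maximum: Optional[int],
) -> Optional[Tuple[int, int]]:
    """Return (from, to), or None when there is nothing new to load."""
    if current_source_maximum is None:
        return None
    if current_source_maximum <= stored_high_water_mark:
        return None
    return stored_high_water_mark, current_source_maximum


def run_once(repository: dict, key: str, source_rows: list) -> list:
    """One run: look up 'from', determine 'to', select rows, save the new mark."""
    from_value = repository.get(key, 0)
    to_value = max((row["version"] for row in source_rows), default=None)
    window = resolve_load_window(from_value, to_value)
    if window is None:
        return []  # up to date; the high water mark stays as it was
    low, high = window
    # Assumption: exclusive lower bound, inclusive upper bound.
    selected = [row for row in source_rows if low < row["version"] <= high]
    repository[key] = high  # record the new (higher) water mark
    return selected


repository = {}
rows = [{"id": 1, "version": 10}, {"id": 2, "version": 20}]

first = run_once(repository, "Account", rows)   # loads both rows
second = run_once(repository, "Account", rows)  # nothing new, loads nothing
rows.append({"id": 3, "version": 30})
third = run_once(repository, "Account", rows)   # loads only the new row
```

In this sketch the second run returns an empty list and leaves the stored value untouched, which mirrors the case where the 'from' and 'to' values are the same.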

Configuring load windows in BimlFlex

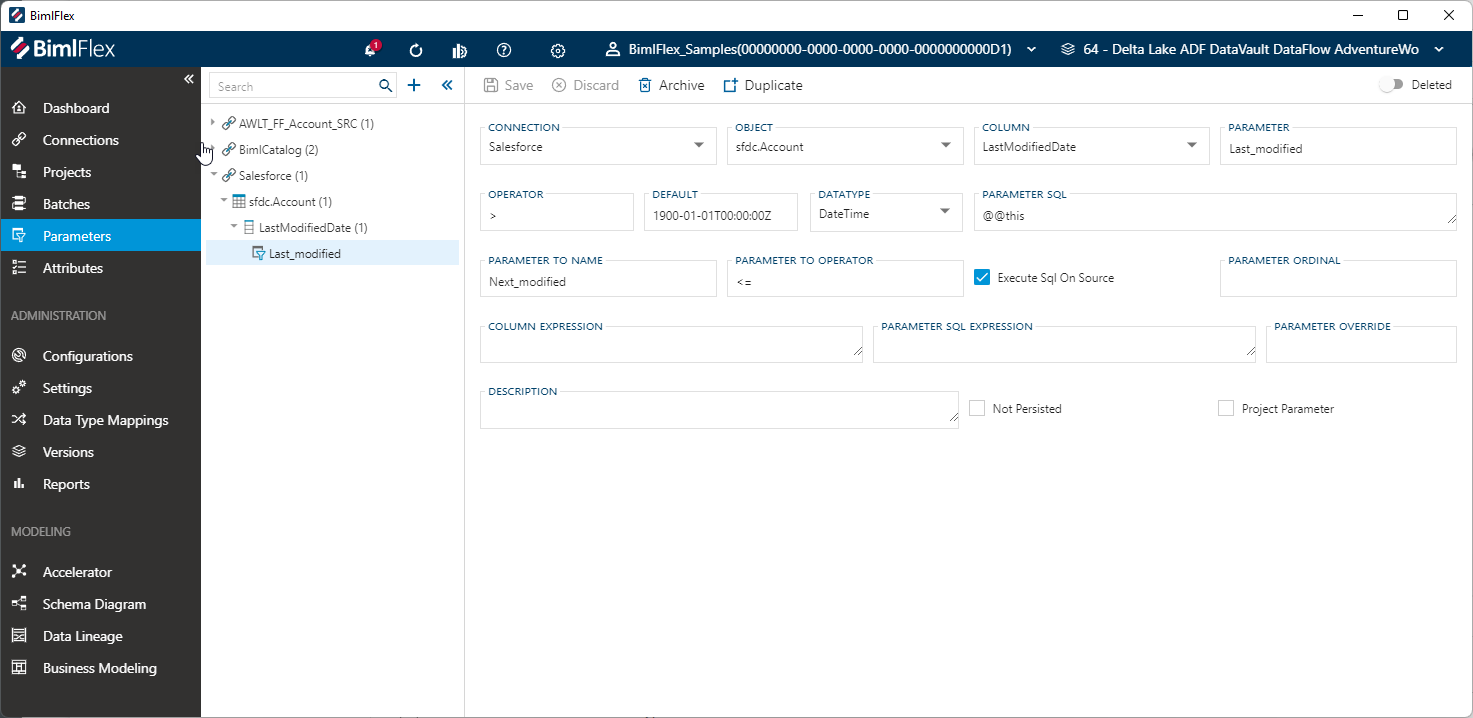

In BimlFlex, this mechanism can be implemented using parameters. Parameters are essentially filters that can be used to limit the data selection for the object that they apply to.

A parameter can have a 'from' and a 'to' component, or only a 'from' one. If both are specified, BimlFlex will treat this as a load window. If only the 'from' part is specified, it will be treated as a filter condition.

In the example below, the Salesforce 'Account' object has a parameter defined for the 'LastModifiedDate' column. The 'from' name of this value is 'Last_modified', and the 'to' name is 'Next_modified'.

Because Salesforce is an unsupported data source in Mapping Data Flows ('data flows'), the data logistics that is generated from this design metadata will include a Copy Activity to first 'land' the data. Because this is the earliest opportunity to apply the load window filter, this is done here. Driven by the parameter configuration, the query that will retrieve the data will include a WHERE predicate.

In addition, BimlFlex will generate 'lookup' procedures that retrieve, and set, the high water mark value using the naming specified in the parameter.
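To make the difference between a load window and a plain filter condition concrete, the sketch below builds the predicate text for both cases. The `@name` placeholder style, the comparison operators and the selected columns are assumptions made for illustration; the query that BimlFlex generates may look different.

```python
from typing import Optional


def build_where_predicate(
    column: str,
    from_name: str,
    to_name: Optional[str] = None,
) -> str:
    """Build predicate text for a parameter (illustrative syntax only)."""
    lower_bound = f"{column} > @{from_name}"
    if to_name is None:
        return lower_bound  # 'from' only: a plain filter condition
    # 'from' and 'to': a load window
    return f"{lower_bound} AND {column} <= @{to_name}"


window_predicate = build_where_predicate(
    "LastModifiedDate", "Last_modified", "Next_modified"
)
filter_predicate = build_where_predicate("LastModifiedDate", "Last_modified")

# Hypothetical column list for the 'Account' object.
query = f"SELECT Id, Name, LastModifiedDate FROM Account WHERE {window_predicate}"
print(query)
```

The names 'Last_modified' and 'Next_modified' are the ones from the example parameter; they would be resolved at runtime by the lookup steps that retrieve and set the high water mark.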

Direct filtering in Mapping Data Flows

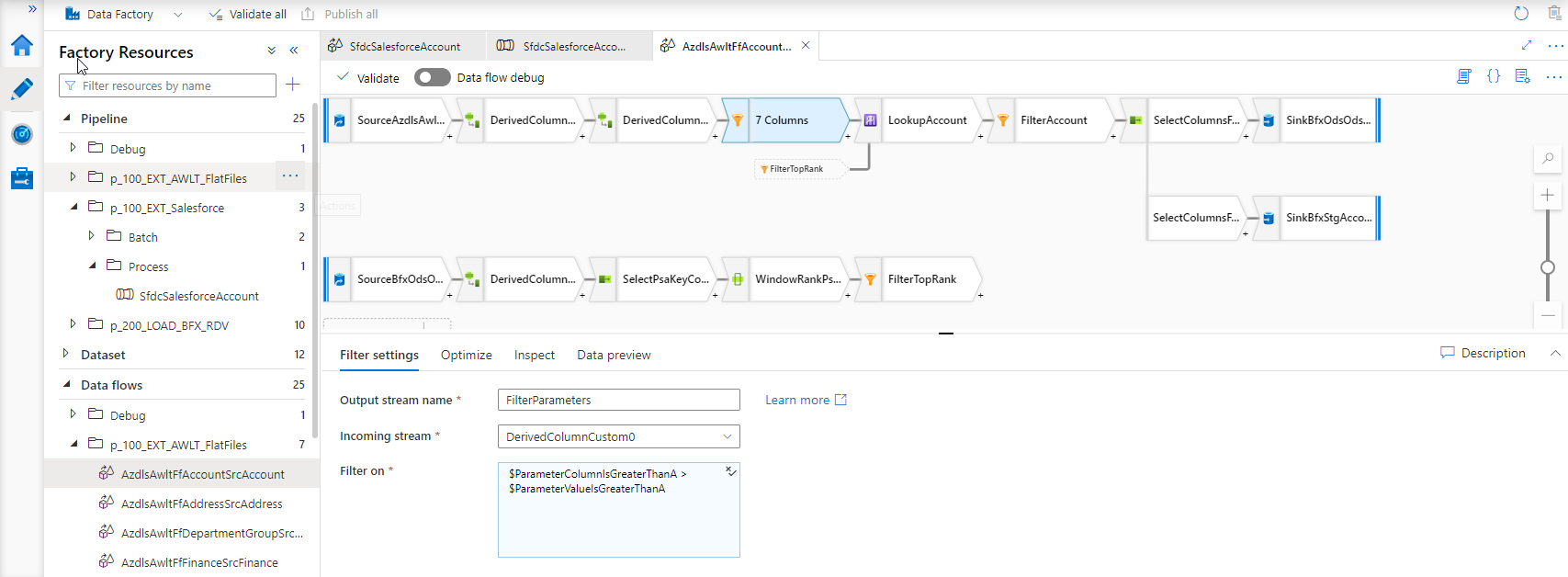

In regular loading processes, where the data flow can directly connect to the data source, the Copy Activity is not generated. In this case, the filtering is applied as early as possible in the data flow process. How early this can be done depends on the technologies involved.

For example, using a database source it is possible to apply this to the SQL SELECT statement. When using inline processing for Delta Lake or file-based sources this is done using a Filter activity.

For example, as displayed in this screenshot:


True to the concepts outlined in earlier blog posts, the BimlFlex parameters are themselves defined as Mapping Data Flow parameters to allow for maximum flexibility. The executing ADF pipeline will provide these to the data flow. The parameter values are passed down into the data flow.

Next steps

At the time of writing, database sources have not been optimized to push the selection to the data source as a WHERE clause. So, the SELECT statement is not yet created for database sources to accommodate filtering here, which is conceptually the most performant outcome. However, the filtering as per the above screenshot already works, so the output is functionally the same.

It is equally possible to now define load windows on file-based data sources, and this is also an area to investigate further. Using data flows, the BimlFlex parameter concept for generating load windows is not restricted to database sources any longer.

Dev Diary - Connecting to unsupported data sources using Mapping Data Flows and BimlFlex

When configuring BimlFlex to use Mapping Data Flows ('data flows'), the generated data flow will in principle directly connect to the configured Source Connection and process the data according to further configurations and settings.

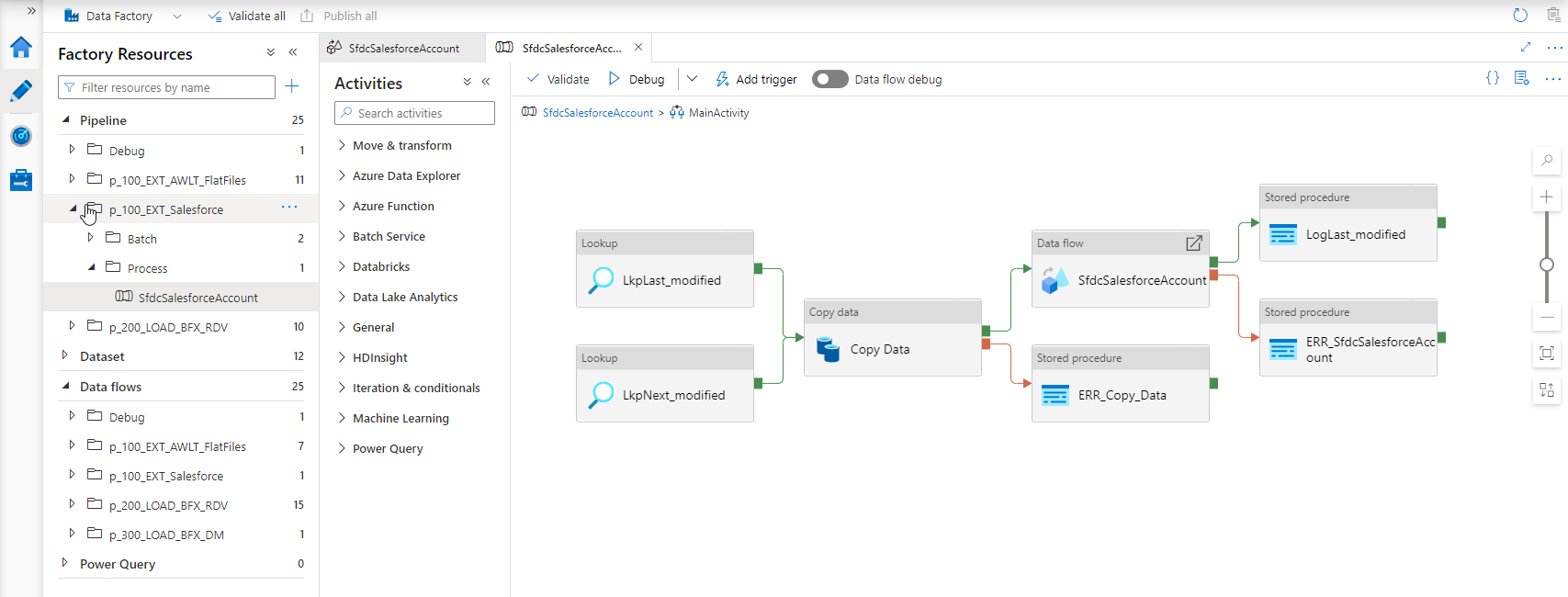

For example, to initially 'stage' data into the data solution, patterns such as the ones below can be generated:

In both of these cases, however, this relies on data flows being able to connect directly to the data source, and this is not always supported. The following link to the Microsoft documentation shows which technology is supported by the various areas of Azure Data Factory (ADF).

At Varigence, we still want to enable our users to mix-and-match the BimlFlex project configuration using our supported data sources, even though this may not (yet) be supported directly by the ADF technology in question.

For example, BimlFlex supports using Salesforce REST APIs as a data source, and this can be used to generate ADF pipelines that stage and process data further. If you wanted to use data flows to implement complex transformations (for example, using derived columns) for a Salesforce data source, that would be an issue.

To make sure our users can still use data flows for data sources that are not (yet) natively supported, the BimlFlex engine has been updated to detect this. If this is the case, the generated output will include additional processing steps to first 'land' the data into a data lake or blob storage, and then process the data from here using data flows.

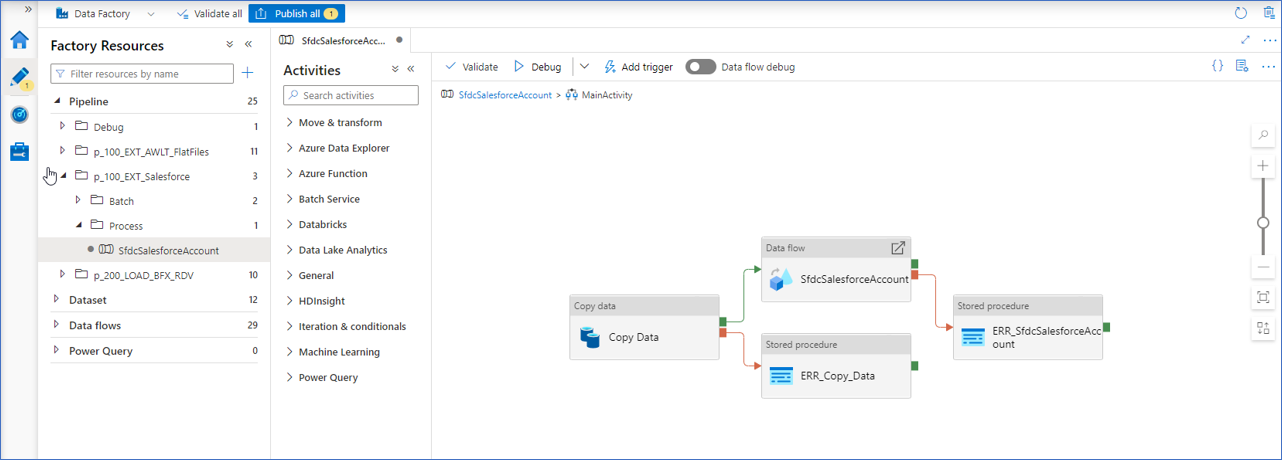

In practice, this means that an additional Copy Data activity will be added to the ADF pipeline that starts the data flow. The ADF pipeline acts as a 'wrapper' for the data flow and already includes the interfaces to the runtime control framework (BimlCatalog).

To summarize, if a BimlFlex project is configured to use data flows and it:

- contains a source connection that is supported, then a direct connection will be generated
- contains a source connection that is not supported, then an additional landing step will be generated
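The decision summarized above can be expressed as a small function. This is only a sketch: the set of supported source types is an illustrative assumption (the Microsoft documentation is the authority on what data flows support), and the activity descriptions are hypothetical labels rather than generated ADF names.

```python
from typing import FrozenSet, List

# Assumption: an illustrative subset, not the actual support matrix.
DIRECTLY_SUPPORTED: FrozenSet[str] = frozenset(
    {"azure_sql", "delta_lake", "delimited_text"}
)


def plan_activities(
    source_type: str,
    supported: FrozenSet[str] = DIRECTLY_SUPPORTED,
) -> List[str]:
    """Return the pipeline steps needed to get source data into a data flow."""
    if source_type in supported:
        # Supported source: the data flow connects to it directly.
        return ["Execute Data Flow (direct source connection)"]
    # Unsupported source: land the data first, then read from the landing area.
    return [
        "Copy Data (land into data lake or blob storage)",
        "Execute Data Flow (read from landing area)",
    ]


print(plan_activities("azure_sql"))
print(plan_activities("salesforce"))
```

For the Salesforce case the plan contains two steps, with the Copy Data step first, matching the landing approach described above.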

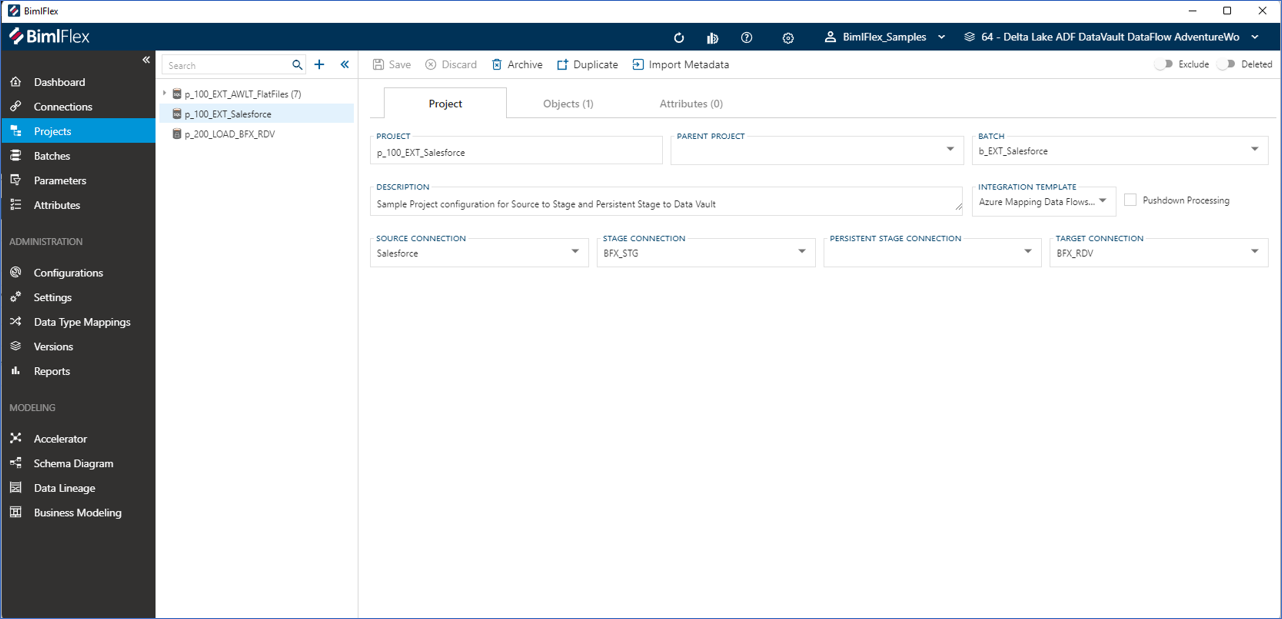

Consider the following project configuration:

In this example, a Salesforce connection is configured as source. A staging connection is also defined and ultimately the data will be integrated into a Data Vault model. No persistent staging area connection is configured for this project. Note that the integration stage is set to Mapping Data Flows. Because Salesforce connections are not natively supported for data flows, a landing step will be added by BimlFlex.

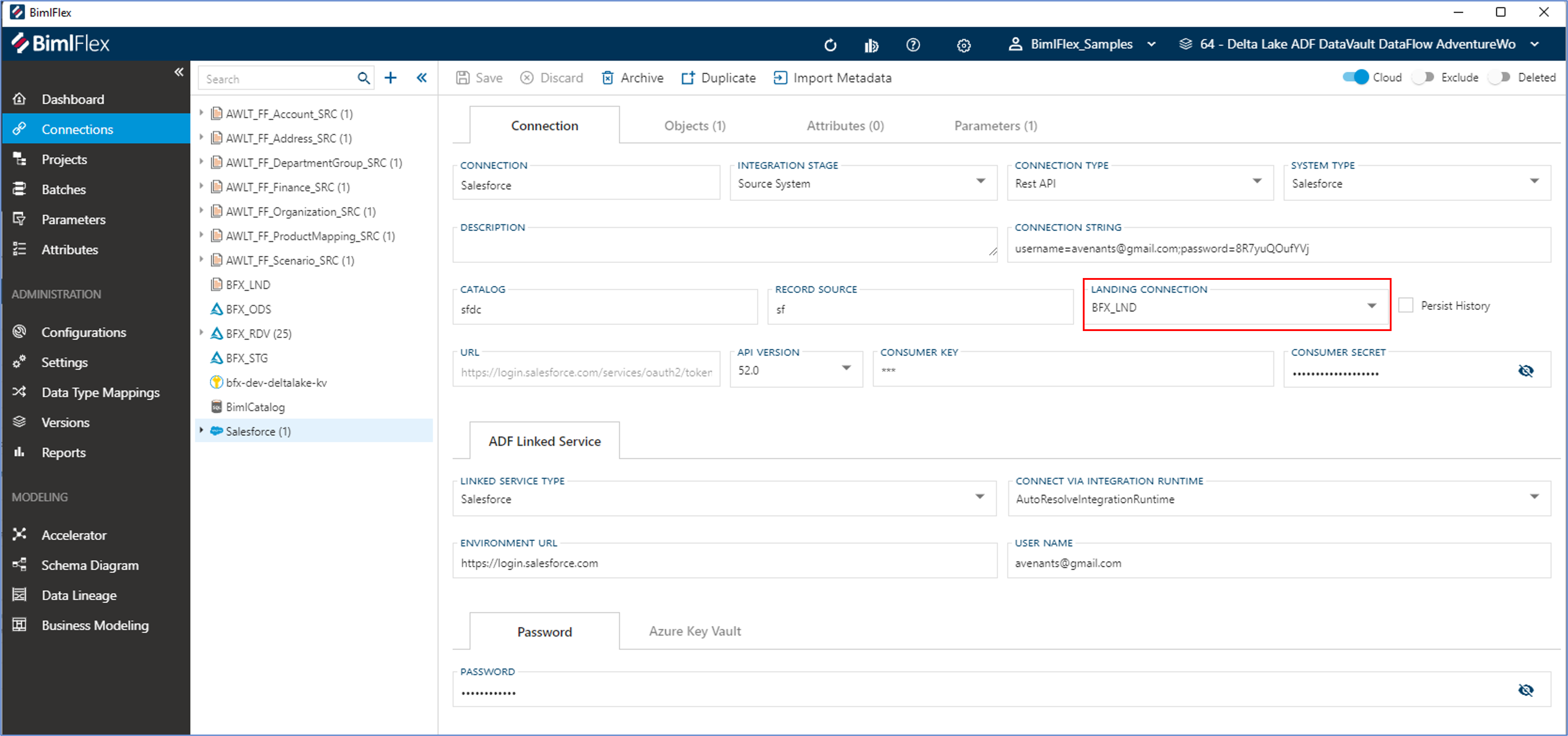

To direct BimlFlex where to land the data, the source connection will need to be configured with a landing connection. This is shown in the screenshot below.

The result in the generated output will appear as per the screenshot below. The Copy Data activity will land the data before the data flow processes it to the staging area.

Dev Diary - Adding Transformations to Mapping Data Flows

The Mapping Data Flows ('data flows') feature of Azure Data Factory (ADF) provides a visual editor to define complex data logistics and integration processes. Data flows provide a variety of components to direct the way the data should be manipulated, including a visual expression editor that supports a large number of functions.

Work is now nearing completion to make sure BimlFlex can incorporate bespoke logic using this expression language, and generate the corresponding Mapping Data Flows.

For every object in every layer of the designed solution architecture, it is possible to add derived columns. Derived columns are columns that are not part of the 'source' selection in a source-to-target data logistics context. Instead, they are derived in the data logistics process itself.

This is done using Derived Column Transformations in Mapping Data Flows, in a way that is very similar to how this works in SQL Server Integration Services (SSIS).

In BimlFlex, you can define complex transformation logic this way using the data flow expression syntax. The resulting code will be added to the selected Mapping Data Flow patterns, and visible as Derived Column Transformations. You can also define dependencies between derived columns, for example that the output of a calculation is used as input for the next one.

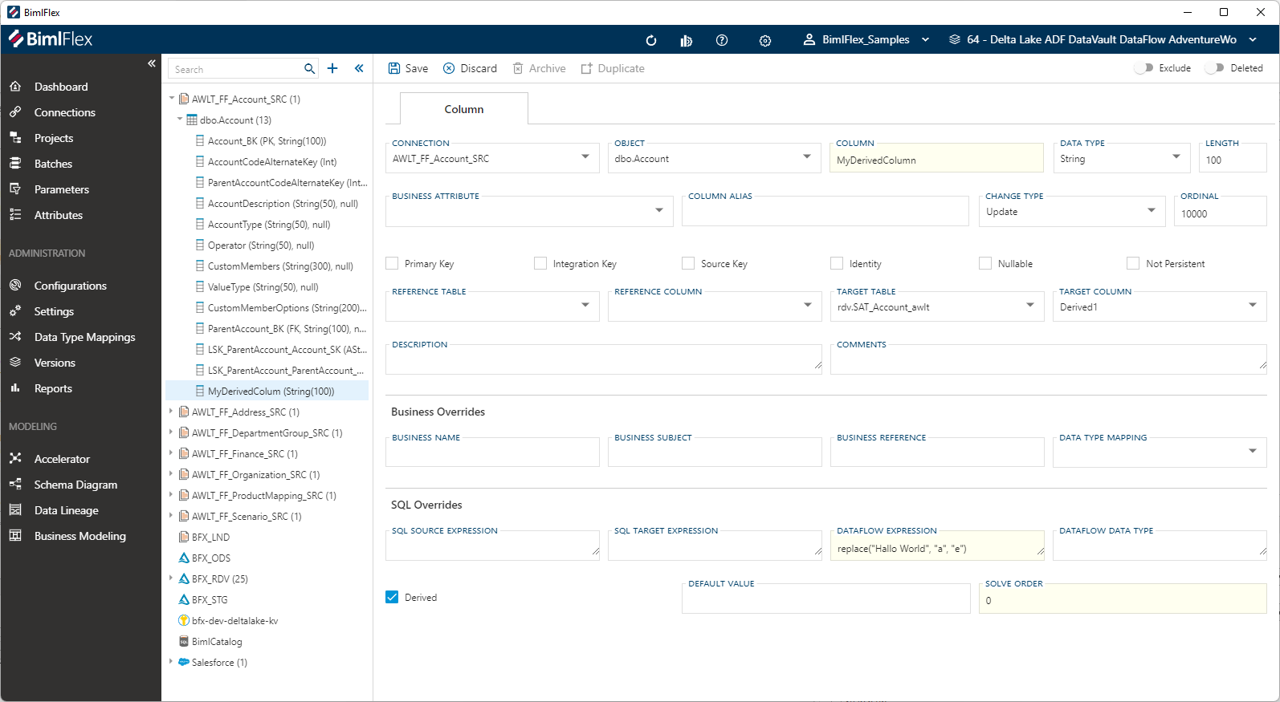

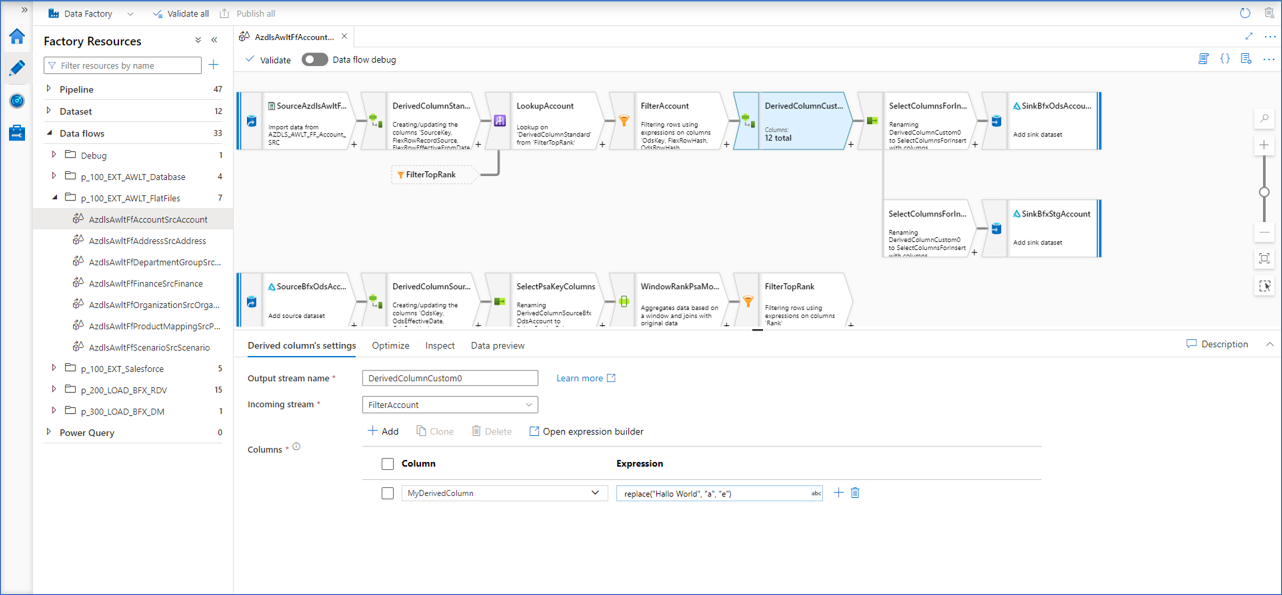

Consider the screenshot below.

The column 'MyDerivedColumn' is defined as part of the 'Account' object. The 'IsDerived' checkbox is checked, and the 'Dataflow Expression' is provided. This metadata configuration will generate a Derived Column Transformation with this column name, and the expression.

The resulting column is added to the output dataset.

In the first screenshot, the 'solve order' property was also set - with a value of 0.

The solve order directs BimlFlex to generate the logic in a certain (incremental) order. A lower solve order will be created as an earlier Derived Column Transformation, and a higher solve order as a later one. The exact numbers do not matter, only that some are higher or lower than others.

If multiple derived columns are defined with the same solve order, they will be generated in the same Derived Column Transformation. This makes it possible to break apart complex logic in separate steps, and use them for different purposes.
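The grouping behaviour of the solve order can be sketched as follows. The tuple layout, the column names and the expressions are made up for the example, and the function only illustrates the ordering and grouping rule; it is not how BimlFlex generates the transformations.

```python
from itertools import groupby
from typing import Dict, List, Tuple

# (column name, solve order, data flow expression); illustrative values only.
DerivedColumn = Tuple[str, int, str]


def group_by_solve_order(columns: List[DerivedColumn]) -> List[Dict[str, str]]:
    """Return one dict per transformation, ordered from low to high solve order."""
    ordered = sorted(columns, key=lambda column: column[1])  # stable sort
    return [
        {name: expression for name, _, expression in group}
        for _, group in groupby(ordered, key=lambda column: column[1])
    ]


columns = [
    ("FullName", 0, "concat(FirstName, ' ', LastName)"),
    ("NameLength", 10, "length(FullName)"),  # depends on FullName
    ("IsActive", 0, "iif(Status == 'A', true(), false())"),
]

steps = group_by_solve_order(columns)
for index, step in enumerate(steps, start=1):
    print(index, list(step))
```

Here 'FullName' and 'IsActive' share solve order 0 and end up in the first step, while 'NameLength' has a higher solve order and lands in a later step where 'FullName' is already available. Only the relative order of the numbers matters, so 10 could just as well be 1.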

This is one way to allow potentially complex logic to be defined in the metadata, and use this to generate consistent output that meets the requirements.