Dev Diary – Generating a Mapping Data Flow Staging process without a Persistent Staging Area

November 25, 2021

A few weeks ago, we covered the generation of a Mapping Data Flows Staging process for Azure Data Factory (ADF), using BimlFlex. This process uses a Persistent Staging Area (PSA) to assert if incoming data is new or changed by implementing a lookup and comparison.

There are some obvious limitations to this particular pattern. For example, this pattern does not support detecting records that have been physically deleted from the data source (a ‘logical’ delete), which may happen for certain data sources. The lookup to the PSA is the equivalent of a Left Outer Join in this sense.

And, generally speaking, not everyone needs or wants to implement a PSA in the first place.

This initial Staging pattern is just one of many patterns that are added to the Mapping Data Flows feature, because being able to use multiple patterns is necessary to develop and manage a real-world data solution.

It is not uncommon for a data solution to require various different Staging patterns to meaningfully connect to the source data across potentially many applications. Sometimes, multiple Staging patterns are needed even for a single data source.

This is because individual data sets may require to be accessed and staged in specific ways, such as for example the ability to detect the deleted records that were mentioned earlier. Some source data sets benefit from this ability, where others may explicitly not want this logic in place because this may result in a performance penalty without any functional gains.

Using BimlFlex, the design and configuration of the data solution directs what patterns will be used and where they are applied. In other words, different configurations result in different patterns being generated.

An example of how modifying the design in BimlFlex will change the Staging pattern is simply removing the PSA Connection from the Project.

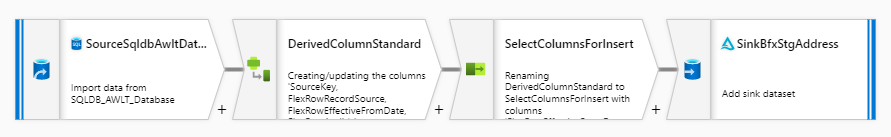

If there is a PSA Connection in place, the Mapping Data Flow will be generated with the PSA lookup and target (sink). Once you remove this Connection, the pattern that will be used is more of a straightforward data copy.

The team at Varigence is working through these and other configuration to make sure the necessary patterns are covered, including delete detection, landing data in blob storage and much more.