Varigence Blog

Delta Lake on Azure work in progress – introduction

At Varigence, we work hard to keep up with the latest technologies so that our customers can apply them to their data solutions, either by reusing their existing designs (metadata) and deploying them in new and different ways, or when starting new projects.

A major development focus recently has been to support Delta Lake for our BimlFlex Azure Data Factory patterns.

What is Delta Lake, and why should I care?

Delta Lake is an open-source storage layer that can be used 'on top of' Azure Data Lake Storage Gen2, where it provides transaction control (Atomicity, Consistency, Isolation and Durability, or 'ACID') features to the data lake.

This supports a 'Lake House' style architecture, which is gaining traction because it offers opportunities to work with various kinds of data in a single environment, for example by combining semi-structured and unstructured data, or batch and streaming processing. This means various use-cases can be supported by a single infrastructure.

Microsoft has made Delta Lake connectors available for Azure Data Factory (ADF) pipelines and Mapping Data Flows (or simply 'Data Flows'). Using these connectors, you can have your data lake 'act' as a typical relational database for delivering your target models, while at the same time using the lake for other use-cases.

Even better, Data Flows can use integration runtimes for the compute, without requiring a separate cluster that hosts Delta Lake. This is the inline feature, and it makes it possible to configure an Azure Storage Account (Azure Data Lake Storage Gen2) and use the Delta Lake features without any further configuration.

Every time you run one or more Data Flows, Azure will spin up a cluster in the background for use by the integration runtime.

How can I get started?

Creating a new Delta Lake is easy. There are various ways to implement this, which will be covered in subsequent posts.

For now, the quickest way to have a look at these features is to create a new Azure Storage Account resource of type Azure Data Lake Storage Gen2. For this resource, the ‘Hierarchical Namespace’ option must be enabled. This will enable directory trees/hierarchies on the data lake – a folder structure in other words.
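As a rough illustration of the setting described above, the following Python sketch builds an ARM-template-style resource definition for such a storage account. The account name, region, SKU and API version are placeholder assumptions and not part of the original walkthrough. The detail that matters is `isHnsEnabled`, the property that corresponds to the 'Hierarchical Namespace' option.

```python
import json


def adls_gen2_resource(account_name: str, location: str) -> dict:
    """Build an ARM-style resource definition for an ADLS Gen2 storage account.

    The name, location, SKU and API version are illustrative placeholders.
    """
    return {
        "type": "Microsoft.Storage/storageAccounts",
        "apiVersion": "2022-09-01",  # assumed; use a version available to you
        "name": account_name,
        "location": location,
        "kind": "StorageV2",
        "sku": {"name": "Standard_LRS"},  # assumed SKU
        "properties": {
            # Corresponds to the 'Hierarchical Namespace' option.
            "isHnsEnabled": True,
        },
    }


if __name__ == "__main__":
    # 'mydeltalake' and 'westeurope' are hypothetical example values.
    print(json.dumps(adls_gen2_resource("mydeltalake", "westeurope"), indent=2))
```

The same option can of course be set through the Azure portal when creating the resource; the sketch only shows which property is involved.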

Next, in Azure Data Factory, a Linked Service must be created that connects to the Azure Storage Account.

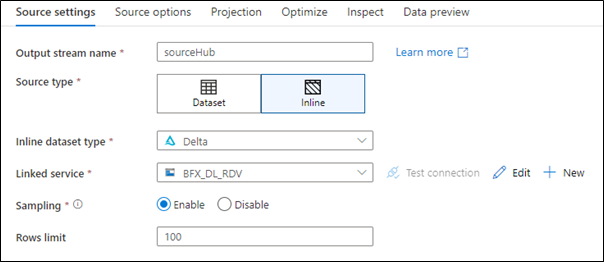
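For readers who prefer to see the Linked Service as a definition rather than a screenshot, here is a minimal sketch of roughly what its JSON looks like, generated from Python. It assumes the `AzureBlobFS` linked service type that Azure Data Factory uses for Azure Data Lake Storage Gen2. The linked service name and account name are hypothetical, and authentication settings (account key, service principal or managed identity) are deliberately left out.

```python
import json


def adls_linked_service(name: str, account_name: str) -> dict:
    """Sketch of an ADF Linked Service definition for ADLS Gen2.

    Authentication properties are omitted; add the ones that match
    your chosen authentication method.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureBlobFS",
            "typeProperties": {
                # ADLS Gen2 is addressed through the 'dfs' endpoint.
                "url": f"https://{account_name}.dfs.core.windows.net",
            },
        },
    }


if __name__ == "__main__":
    # Both arguments are hypothetical example values.
    print(json.dumps(adls_linked_service("LS_DeltaLake", "mydeltalake"), indent=2))
```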

When creating a new Data Flow in Azure Data Factory, this new connection can be used as an inline data source with the type of ‘Delta’.

For example, as shown in the screenshot below:

This is all that is needed to begin working with data on Delta Lake.

What is Varigence working on now?

The Varigence team has updated the BimlFlex App, its code generation patterns and BimlStudio to facilitate these features. Work is underway to complete the templates and make sure everything works as expected.

In the next post we will investigate how these concepts translate into working with BimlFlex to automate solutions that read from, or write to, a Delta Lake.

Important BimlFlex documentation updates

Having up-to-date documentation is something we work on every day, and recently we have made significant updates to our BimlFlex documentation site.

Our reference documentation will always be up to date, because it is automatically generated from our framework design. Various additional concepts have also been explained and added.

For example, a more complete guide to using Extension Points has been added. Extension Points are an important feature that allows our customers to use the out-of-the-box framework while also making whatever customizations their specific needs require.

Have a look at the following topics:

- Extension Points, with links to updated reference documentation on each Extension Point.

- Delivering an Azure Data Factory solution using BimlFlex.

- An overview of the key BimlFlex mechanisms.

- BimlFlex Settings and Overrides.